Despite its size, Contiki is a complex system with multiple layers of interrupts, processes, protothreads, serial port input and output functions, radio device drivers, power-saving duty cycling mechanisms, medium access control protocols, multiple network stacks, fragmentation techniques, self-healing network routing protocols, best-effort and reliable communication abstractions, and Internet application protocols. These run on a wide range of microprocessor architectures and hardware devices, and are compiled with a variety of C compilers.

Typical Contiki systems also have extreme memory constraints and form large, unreliable wireless networks. How can we ensure that Contiki, with all these challenges, does what it is supposed to do?

Over the years, open source projects have tried different ways to ensure that the code is always stable across multiple platforms. A common approach has been to ask people to test the code on their own favorite hardware well before a release. This was the approach that Contiki took a few years ago. The problem is that it is really hard to get good test coverage this way, particularly for systems that are inherently networked. Most testers don't have access to large numbers of nodes, and even if they do, tests are difficult to set up because of the size of the networks needed. Also, since people are more motivated to run tests near a release, large numbers of bugs may be found right before the release. It would be great to find those bugs much earlier.

Many projects do nightly builds to ensure that the source code is kept sane. This is something we have done for a long time in Contiki: the code has been compiled with 5 different C compilers for 12 platforms. But this is not enough to catch problems with code correctness, as the functionality of the system is not tested. Testing the functionality is much more difficult, since it requires us to actually run the code.

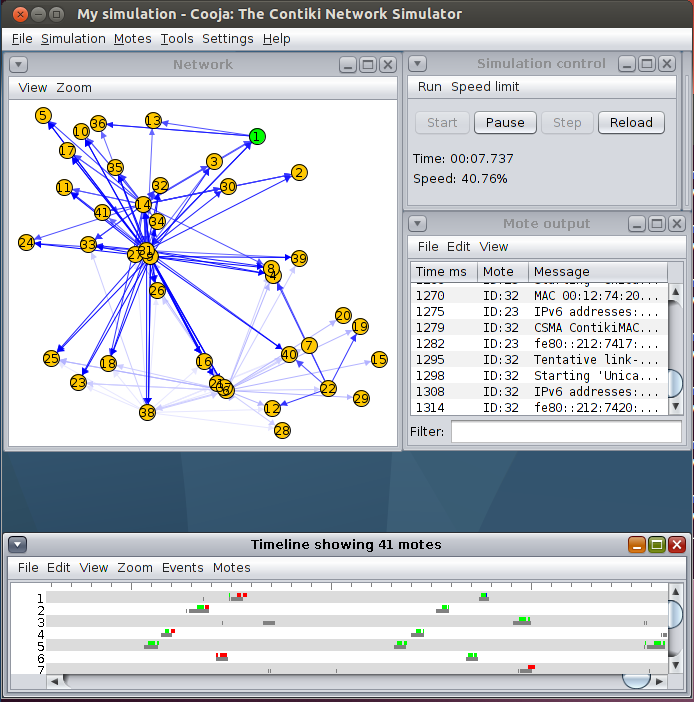

Fortunately, Contiki provides a way to run automated tests in large networks with a fine-grained level of detail: Cooja, the Contiki network simulator. But taking this to a full regression test framework took a bit of work.

First, to make scripted simulation setups easier, Cooja author Fredrik Österlind wrote a test script framework for Cooja. Second, GitHub contributors Rémy Léone and Ilya Dmitrichenko developed a Travis plugin for Contiki. And now Contiki gets a new regression test framework from Thingsquare Mist.

Cooja, the Contiki network simulator

Cooja simulates networks of nodes, where each node can be a highly detailed emulator of a specific hardware device. Thanks to mspsim and avrora, Cooja can emulate the TI MSP430 CPU, with a recent addition of the MSP430x architecture, and the Atmel AVR. Nodes can also run non-emulated Contiki code compiled for the native platform, typically the x86.

With Cooja, the regression test framework, and Travis, we can now run regression tests on every single commit made to the repository. We spot problems early in the development process, even before the code gets into the repository. Although regression tests do not provide full certainty that the code works in all situations, they do help us spot showstoppers. This lets us all sleep well at night, knowing that any new code in the system is not only reviewed by the Contiki developers and automatically built, but also automatically run in a range of different network setups, with a number of different network protocols, and on multiple platforms and processor architectures.

The regression test framework resides in a directory in the Contiki root, regression-tests/. To run the complete set of regression tests, simply run make in this directory. Each test prints either OK or FAIL ಠ_ಠ to indicate success or failure.
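When the full suite runs, it can be handy to tally the results. The following is a minimal sketch, not part of Contiki: it assumes only that each result line contains the word OK or FAIL as a separate token, as described above. The exact output layout is not specified here, and the function name `summarize` is hypothetical.

```python
def summarize(output):
    """Count passing and failing tests in captured test output.

    Assumption: a result line contains the standalone token "OK" or "FAIL".
    Lines with neither token are ignored.
    """
    ok = 0
    fail = 0
    for line in output.splitlines():
        tokens = line.split()
        if "FAIL" in tokens:
            fail += 1
        elif "OK" in tokens:
            ok += 1
    return ok, fail
```

The captured output of a make run could be passed to `summarize` as a string, and a non-zero failure count used to flag the run.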

The regression-tests/ directory contains a set of subdirectories:

- 01-compile/
- 02-hello-world/
- 03-base/
- 04-rime/
- 05-netperf/
- 06-shell/
- 07-elfloader/
- 08-collect/
- 09-ipv4/
- 10-ipv6/
- 11-rpl/
- 12-ipv6-apps/

The directory names start with two digits to create an explicit ordering and allow doing iterative regression testing. For example, if working on the RPL code, go into the 11-rpl/ directory and run make to check that the regression tests for RPL work, without having to run all the other tests that aren't relevant to the RPL code.
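The two-digit naming convention can be sketched in a few lines of Python. This is an illustration only, not how the Contiki Makefiles are implemented: the helper names `ordered_test_dirs` and `make_command` are hypothetical, and the code assumes directory names of the form `NN-name` as in the listing. The `-C` option is GNU make's standard way to run make in another directory.

```python
import re
from pathlib import Path

# Assumption: test directories are named "NN-name", e.g. "11-rpl".
TEST_DIR = re.compile(r"^(\d{2})-(.+)$")

def ordered_test_dirs(root):
    """Return the test directory names under root, sorted by numeric prefix."""
    names = [p.name for p in Path(root).iterdir()
             if p.is_dir() and TEST_DIR.match(p.name)]
    return sorted(names, key=lambda n: int(TEST_DIR.match(n).group(1)))

def make_command(root, name):
    """Build the make invocation for a single test directory."""
    return ["make", "-C", str(Path(root) / name)]
```

Running only the command for 11-rpl corresponds to the iterative workflow of testing just the part of the system being changed.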

Each test directory contains a number of tests. Simulation test directories contain Cooja csc files, whose names also start with two digits. For example, the 02-hello-world/ directory contains:

- Makefile
- 01-multithreading.csc
- 02-sky-coffee.csc
- x03-crosslevel.csc
- 04-sky-checkpointing.csc

The files that start with two digits are run, in order, when running make in the directory. The file named x03-crosslevel.csc has been disabled by putting an x at the beginning of the filename.
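The enable/disable convention for simulation files can be illustrated with a short Python sketch. It is an assumption-laden model of the rule described here (files starting with two digits and ending in .csc are run, anything else is skipped), not the selection logic of the actual Makefile; the function name `enabled_simulations` is hypothetical.

```python
import re
from pathlib import Path

# Assumption: an enabled simulation file is named "NN-something.csc".
# A leading "x" (as in x03-crosslevel.csc) breaks the match and disables it.
ENABLED = re.compile(r"^\d{2}-.*\.csc$")

def enabled_simulations(test_dir):
    """Return the enabled .csc file names in test_dir, in run order."""
    names = [p.name for p in Path(test_dir).iterdir() if p.is_file()]
    return sorted(n for n in names if ENABLED.match(n))
```

Because the prefix always has two digits, plain string sorting gives the same order as numeric sorting.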

Some of the tests include hundreds of networked nodes, whereas others contain only a single node. For example, the multithreading and hello-world tests contain only one node each. Some of the IPv6/RPL and Rime tests contain hundreds of nodes, to test the scalability of the network protocols by checking that they behave correctly even if the number of neighbors and routes exhausts the neighbor and routing tables used by the network protocols.

A few statistics for the new regression test setup:

- Number of automated tests: 42

- Number of build platforms: 11

- Number of compiled examples: 38

- Number of C compilers used: 4

- Number of processor configurations tested: 9

- Number of processor architectures tested: 4 (AVR, MSP430, MSP430x, x86)

- Number of network stacks tested: 3 (IPv4, IPv6, Rime)

- Number of network protocols tested: 16

- Total number of network nodes used in the tests: 1021

- Average number of per-node neighbors: 252.7

